VMware vSphere Best Practices

NGX STORAGE


Technical White Paper

March 2022

Version 1.2

Always check support.ngxstorage.com for the latest version of this document.

Revisions

| Date | Description |
|---|---|
| April 2021 | Initial document release (version 1.1) for NGX Storage AFA and H Series / NGX Operating Software 1.8.x |
| March 2022 | Document version 1.2 for NGX Storage GEN2 Systems / NGX Operating Software 2.1.x |

Table of Contents

1.1– Fibre Channel and iSCSI Support

1.1.1- Fibre Channel Configuration

1.1.8– Virtual SCSI Controller

1.3.1- Round Robin Path Policy

1.3.2- The I/O Operations Limit

2- VMware Integration and Plug-Ins

2.1- VAAI (vStorage APIs for Array Integration)

3- Thin Provisioning and Data Reduction

5.1- Virtual SCSI Adapter and Virtual Disk

5.3- Realtime Automated Storage Tiering (RAST)

Executive Summary

This guide provides best practices for connecting NGX Storage to VMware ESXi hosts. It focuses on configuring NGX Storage features to maximize the performance and usability of the storage, and it covers the settings relevant to the most common use cases. After reading it, a storage or system administrator should be able to prepare NGX Storage for access from ESXi hosts over Fibre Channel, iSCSI, or NFS.

For specific environments and use cases, contact NGX Storage through www.ngxstorage.com to discuss whether these recommendations apply.

1- Configuration

1.1– Fibre Channel and iSCSI Support

1.1.1- Fibre Channel Configuration

Fibre Channel (FC) is a high-speed data transfer protocol that provides in-order, lossless delivery of raw block data. In a Storage Area Network (SAN), FC is primarily used to connect a storage array to hosts (servers). FC networks can use either a switched fabric or a point-to-point topology, and NGX Storage supports both.

In a switched fabric, all devices connect to Fibre Channel switches that operate together as a single fabric. A switched fabric has several advantages over a point-to-point topology. First, it can scale to tens of thousands of ports, while point-to-point connectivity is limited. Second, the FC switches manage the state of the fabric and provide optimized paths through the Fabric Shortest Path First (FSPF) routing protocol. Finally, multiple pairs of ports can communicate simultaneously, and the failure of one port does not affect the operation of the others.

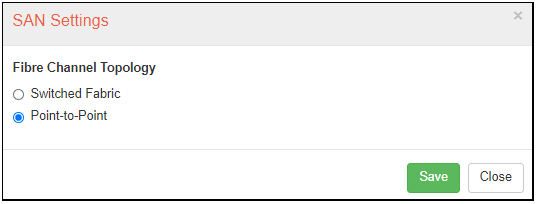

NGX Storage recommends choosing the FC topology according to the size of the SAN. With only a few servers and a single storage array, a point-to-point topology keeps costs down. Larger SANs need a switched fabric, which provides performance optimization and easier management. To configure the topology, go to Maintenance > Services > SAN Settings (Figure 1).

Figure 1: SAN Service Settings

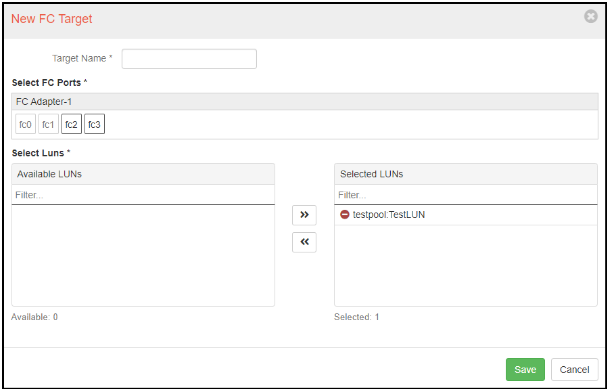

After creating a LUN and choosing the appropriate topology, create a new FC target to enable connections between the ESXi hosts and NGX Storage. Give the target a name that identifies the ESXi hosts connected to it, then select the FC ports that are connected to those hosts. Redundancy is essential: make sure that every target has redundant connections between the ESXi hosts and the storage controllers. Finally, map the LUNs to the target that serves the correct ESXi hosts. To create a new target, go to SAN > FC Targets > New Target (Figure 2).

Figure 2: Creating FC Target

The number of FC ports per FC target and the number of FC targets in a SAN depend on the system design, which varies case by case with the devices, topology, hosts, and even applications involved. NGX Storage recommends consulting NGX Storage system engineers before deciding on these numbers.

Known Issues and Limitations:

When a virtual machine with an attached RDM disk starts, a SCSI LUN reset command is sent to the storage. In some cases, this causes a loss of Fibre Channel connectivity between devices. For this reason, Raw Device Mapping (RDM) is not supported over a Fibre Channel fabric; RDM disks can be used with iSCSI only.

1.1.2- iSCSI Configuration

iSCSI (Internet Small Computer Systems Interface) is an IP-based storage networking standard that provides block-level access to storage devices by carrying SCSI commands over a TCP/IP network. Fibre Channel on NGX Storage provides up to 32 Gbps, whereas iSCSI on NGX Storage can provide up to 200 Gbps. Unlike Fibre Channel devices, iSCSI devices communicate over IP addresses. To assign data IP addresses to the interfaces, go to Configuration > Network Settings.

As a best practice, NGX Storage does not recommend aggregated interfaces for iSCSI. Instead, assign an IP address to each interface and use multipathing across them. The NGX Storage GUI does not allow management and data interfaces to have IP addresses in the same subnet, and as a best practice they should be kept in different subnets.

Assign at least two IP addresses on the data interfaces to provide multipath redundancy. Without multipath LUN access, VMware can trigger an All Paths Down (APD) condition, and you can lose your datastores even though they are still available on the storage. Do not use single-path LUNs in production environments.

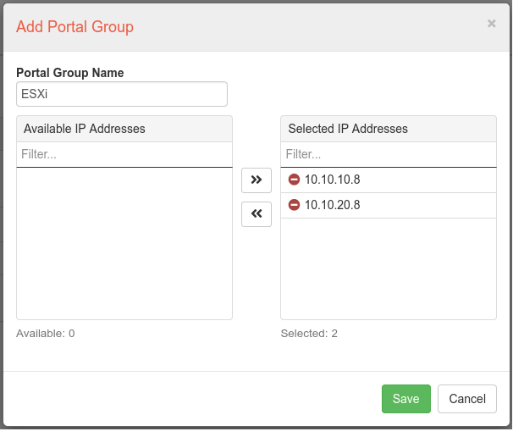

An iSCSI target needs a portal group, which consists of data IP addresses. Define portal groups according to the groups of ESXi hosts that access the related LUNs, for example a cluster of ESXi hosts working together on one or more specific LUNs. A portal group should offer those ESXi hosts IP addresses in the same subnet as the hosts. To create a new portal group, go to SAN > Portal Groups > Add Group (Figure 3).

Figure 3: New Portal Group

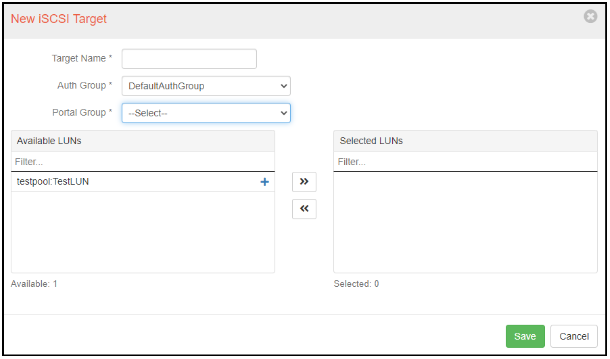

Network settings and portal groups are prerequisites for iSCSI targets. Once they are configured correctly, adding an iSCSI target is simple: go to SAN > iSCSI Targets > New Target (Figure 4).

Figure 4: New iSCSI Target

At this stage, specify a suitable iSCSI target name, select the correct portal group, and assign the LUN or LUNs that you want to map to these ESXi hosts. The iSCSI targets then become visible from the ESXi hosts, provided that the network configuration and portal groups are correct.

1.1.3– Target Port Limits

NGX AFA and H Series Storage supports an unlimited number of FC/iSCSI host initiators. For optimal performance, however, plan the number of initiators per target port so that their combined traffic can use the available bandwidth without causing congestion.

1.1.4– Jumbo Frame

ESXi hosts can access NGX Storage with jumbo frames enabled. When creating a link aggregation on the iSCSI network, jumbo frames (MTU 9000) can be enabled in the NGX Graphical User Interface under Configuration > Network Settings > Aggregation.

As a best practice, NGX Storage recommends keeping the default MTU size of 1500. If large blocks of data need to be transmitted with jumbo frames, every Ethernet component in the iSCSI data path (host NICs, virtual switches, VMkernel ports, physical switches, and storage ports) must support and be configured for jumbo frames.
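On the ESXi side, jumbo frames have to be enabled on both the virtual switch and the VMkernel port used for iSCSI, and the path should then be tested end to end. The sketch below assumes a standard vSwitch (a distributed switch is configured from vCenter instead). The vSwitch and VMkernel port names are site-specific arguments, and setting `DRY_RUN=1` only prints the commands so they can be reviewed first.

```sh
#!/bin/sh
# Sketch: enable a 9000-byte MTU for iSCSI on an ESXi host with a standard vSwitch.
# vSwitch and VMkernel port names are passed in; they are site-specific.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# set_jumbo VSWITCH VMK: raise the MTU on the vSwitch first, then on the VMkernel port.
set_jumbo() {
    run esxcli network vswitch standard set --vswitch-name="$1" --mtu=9000
    run esxcli network ip interface set --interface-name="$2" --mtu=9000
}

# check_jumbo VMK TARGET_IP: ping a storage data IP with fragmentation disabled (-d)
# and an 8972-byte payload: 9000 - 20 (IPv4 header) - 8 (ICMP header).
check_jumbo() {
    run vmkping -I "$1" -d -s 8972 "$2"
}
```

For example, run `set_jumbo vSwitch1 vmk1` followed by `check_jumbo vmk1 192.0.2.10` (example names and a documentation IP address). If this ping fails while a plain `vmkping` to the same address succeeds, some component in the path is not passing 9000-byte frames.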

1.1.5– Disable Delayed Ack

High network congestion can cause iSCSI transfer latency that exceeds acceptable levels. To prevent this, VMware recommends disabling Delayed ACK on the ESXi hosts. Follow the steps in the VMware Knowledge Base article below.

Disabling Delayed ACK in ESX/ESXi Hosts (1002598) (vmware.com)
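As an alternative to the vSphere Client procedure in the KB article, Delayed ACK can be changed per iSCSI adapter from the ESXi shell. This is a sketch that assumes your ESXi build lists `DelayedAck` as settable in `esxcli iscsi adapter param get --adapter=<adapter>`. The adapter name (for example `vmhba64`) comes from `esxcli iscsi adapter list`. A host reboot may be needed before the change takes effect, so follow the KB article on that point.

```sh
#!/bin/sh
# Sketch: disable Delayed ACK on one iSCSI adapter from the ESXi shell.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# disable_delayed_ack ADAPTER: set the DelayedAck adapter parameter to false.
disable_delayed_ack() {
    run esxcli iscsi adapter param set --adapter="$1" --key=DelayedAck --value=false
}
```

For example, run `disable_delayed_ack vmhba64`, then check the `DelayedAck` row of `esxcli iscsi adapter param get --adapter=vmhba64`.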

1.1.6– Datastore Size

ESXi 7.0U1 (the latest vSphere release when this document was written) supports a maximum datastore size of 64 TB. For optimal performance, however, a more manageable size is recommended. In most environments, datastores of 1 TB to 4 TB work smoothly, and as a best practice NGX Storage recommends not exceeding this range.

Note: The appropriate size should be based upon the IOPS and capacity requirements for specific environments.

1.1.7– VMs/Datastore

ESXi 7.0U1 supports a maximum of 2048 powered-on virtual machines per VMFS datastore. Most environments run 15 to 20 active VMs per datastore, and as a best practice NGX Storage recommends keeping 15 to 20 VMs per datastore for optimal performance.

Note: The appropriate number should be based upon the IOPS and capacity requirements for specific environments.

1.1.8– Virtual SCSI Controller

When a new virtual machine is created, VMware automatically selects a virtual SCSI controller based on the guest operating system being installed. As a best practice, NGX Storage recommends keeping the automatically selected controller.

1.1.9– VMFS

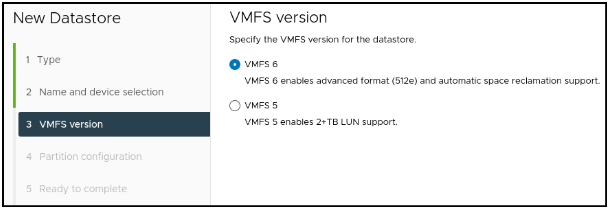

NGX Storage recommends using the latest VMFS version supported by the ESXi hosts. For ESXi 5.x through 6.0, use VMFS-5; for ESXi 6.5 and later, use VMFS-6. In ESXi 7.0U1, VMFS-6 is selected by default (Figure 5).

Figure 5: VMFS Version Selection

A VMFS-5 datastore cannot be upgraded to VMFS-6 in place. Instead, create a new datastore formatted with VMFS-6, migrate all VMs to it with Storage vMotion, and then remove the old VMFS-5 datastore from the ESXi hosts.
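Before planning the migration, you can find the remaining VMFS-5 datastores on a host with `esxcli storage filesystem list`, which prints one row per mounted volume, including its type (`VMFS-5`, `VMFS-6`, `NFS`, ...). The filter below is a minimal sketch that keeps only rows containing a field exactly equal to `VMFS-5`. It scans all fields because datastore names may contain spaces and shift the column positions.

```sh
#!/bin/sh
# list_vmfs5: read `esxcli storage filesystem list` output on stdin
# and print only the rows that contain a field exactly equal to VMFS-5.
list_vmfs5() {
    awk '{ for (i = 1; i <= NF; i++) if ($i == "VMFS-5") { print; next } }'
}
```

Usage on the host: `esxcli storage filesystem list | list_vmfs5`. An empty result means no VMFS-5 datastores remain.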

1.2- Timeout

1.2.1-Disk Timeout

Disk timeout is an important factor in keeping applications running through expected or unexpected events such as failures and reboots. In most applications and operating systems, the default SCSI timeout is 30 seconds. To ensure uptime, NGX Storage recommends increasing the SCSI timeout to 120 seconds.

Depending on your guest OS, follow the steps in the VMware articles below to change the value.

Set Disk Timeout on Windows Guest OS

Set Disk Timeout on Linux Guest OS
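For a Linux guest, the current SCSI timeout of each disk is exposed in sysfs at `/sys/block/<device>/device/timeout`. The sketch below writes the given number of seconds to every `sd*` disk of a running guest. It must run as root, and the value does not survive a reboot, so treat it as a quick runtime change; the VMware article above describes the persistent configuration. `SYSFS_ROOT` is a hypothetical hook that exists only so the function can be tried against a scratch directory.

```sh
#!/bin/sh
# set_disk_timeout SECONDS: write SECONDS to the timeout file of every sd* disk.
# SYSFS_ROOT defaults to the real /sys; override it only for testing.
set_disk_timeout() {
    for f in "${SYSFS_ROOT:-/sys}"/block/sd*/device/timeout; do
        [ -e "$f" ] || continue   # the glob matched no disk
        echo "$1" > "$f"
    done
}
```

As root, run `set_disk_timeout 120`; afterwards `cat /sys/block/sda/device/timeout` should print 120.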

1.2.2- iSCSI Timeouts

Configure the advanced timeout settings of the iSCSI initiators on the ESXi hosts to prevent the loss of datastores or iSCSI paths. The relevant settings are LoginTimeout, RecoveryTimeout, and No-Op Timeout.

LoginTimeout specifies the time, in seconds, that the initiator waits for the login response to finish. Its default value is 5 seconds.

RecoveryTimeout specifies the amount of time, in seconds, that can elapse while a session recovery is performed. If this time is exceeded, the iSCSI initiator ends the session. Its default value is 10 seconds.

No-Op Timeout specifies the amount of time, in seconds, that can elapse before the host receives a NOP-In message, which the iSCSI target sends in response to a NOP-Out request. When the No-Op Timeout is exceeded, the initiator ends the current session and starts a new one. Its default value is 10 seconds.

For more details, see the VMware documentation below.

Configuring Advanced Parameters for iSCSI

As a best practice, NGX Storage recommends changing LoginTimeout, RecoveryTimeout, and No-Op Timeout to 20, 40, and 30 seconds respectively.

LoginTimeout : 20

RecoveryTimeout : 40

No-Op Timeout : 30

To change these values on an ESXi host, follow the steps below.

- Select the host in vCenter.
- On the Configure tab, select Storage Adapters.
- From the list, select the correct iSCSI initiator adapter.
- Click the Advanced Options tab, then click the Edit button.
- Change the three timeout values (LoginTimeout, RecoveryTimeout, and No-Op Timeout) to the recommended values.
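The same three values can also be set from the ESXi shell. In `esxcli iscsi adapter param`, the No-Op Timeout appears under the key `NoopOutTimeout`. The sketch below assumes a software or dependent iSCSI adapter whose name (for example `vmhba64`) you take from `esxcli iscsi adapter list`; `DRY_RUN=1` prints the commands instead of running them.

```sh
#!/bin/sh
# Sketch: apply the NGX-recommended iSCSI timeouts to one adapter.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# set_ngx_iscsi_timeouts ADAPTER: LoginTimeout=20, RecoveryTimeout=40, NoopOutTimeout=30.
set_ngx_iscsi_timeouts() {
    for kv in LoginTimeout=20 RecoveryTimeout=40 NoopOutTimeout=30; do
        # ${kv%%=*} is the key before "=", ${kv#*=} is the value after it.
        run esxcli iscsi adapter param set --adapter="$1" \
            --key="${kv%%=*}" --value="${kv#*=}"
    done
}
```

For example, run `set_ngx_iscsi_timeouts vmhba64`, then confirm the values with `esxcli iscsi adapter param get --adapter=vmhba64`.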

1.3- Multipathing

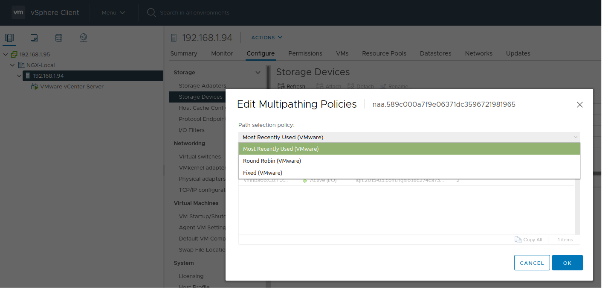

To keep a constant connection between the ESXi host and the storage, VMware supports multipathing. To enable it, each LUN must be presented to the host over multiple paths. ESXi 7.0U1 offers three path selection policies: Most Recently Used, Round Robin, and Fixed. By default, it selects Most Recently Used (the exact default depends on the storage array type plugin that claims the device).

To keep performance optimal and prevent path loss, NGX Storage requires the Round Robin policy.

1.3.1- Round Robin Path Policy

The Round Robin policy uses an automatic path selection algorithm that rotates through all available paths. NGX Storage always performs I/O in active/active mode across its controllers, but Round Robin also works with active/passive arrays; in that case, the algorithm rotates only through the active paths.

After connecting the ESXi host to LUNs on NGX Storage, change the path selection policy to Round Robin. For the steps, see the following VMware documentation (Figure 6).

Change Path Selection Policy (VMware)

Figure 6: Multipathing Policies

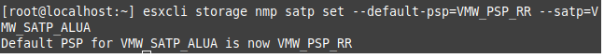

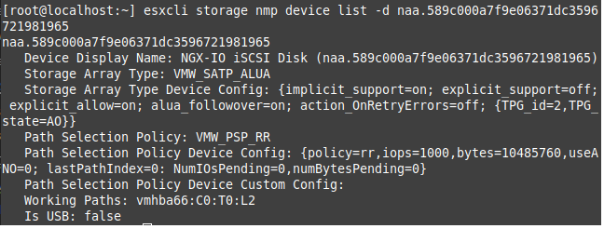

From the ESXi shell, Round Robin can be applied quickly. The following command makes Round Robin the default path selection policy for devices claimed by VMW_SATP_ALUA (Figure 7).

# esxcli storage nmp satp set --default-psp=VMW_PSP_RR --satp=VMW_SATP_ALUA

Figure 7: Making Round Robin Multipathing Default
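The command above changes the default path selection policy for every ALUA array attached to the host. Where other arrays share the same hosts, a narrower option is a SATP claim rule that matches NGX devices by their SCSI vendor string and also sets the I/O operations limit recommended in section 1.3.2. This is a sketch, not an NGX-published rule. The vendor string is deliberately an argument: copy it from the `Vendor:` field of `esxcli storage core device list -d <naa id>` for one NGX LUN. A claim rule only affects devices claimed after it is added, so LUNs that are already present still need the per-device commands or a reboot.

```sh
#!/bin/sh
# Sketch: claim rule for NGX devices (vendor string supplied by the caller).

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# add_ngx_claim_rule VENDOR: claim devices reporting VENDOR with VMW_SATP_ALUA
# and Round Robin, switching paths after every I/O (iops=1).
add_ngx_claim_rule() {
    run esxcli storage nmp satp rule add --satp=VMW_SATP_ALUA \
        --vendor="$1" --psp=VMW_PSP_RR --psp-option="iops=1" \
        --description="NGX Storage RR iops=1"
}
```

After adding the rule, list it with `esxcli storage nmp satp rule list -s VMW_SATP_ALUA`.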

Use the following command to apply the Round Robin path selection policy only to NGX Storage devices (device IDs beginning with naa.589c).

# for i in `esxcli storage nmp device list | grep '^naa.589c'` ; do esxcli storage nmp device set --device $i --psp VMW_PSP_RR; done

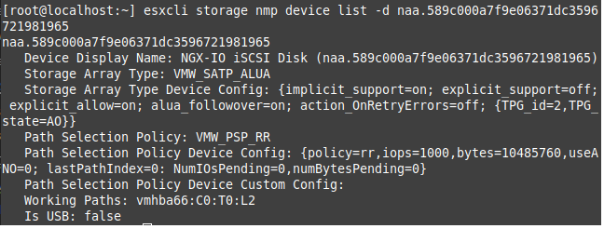

After applying the Round Robin path selection policy, verify it with the following command (Figure 8).

# esxcli storage nmp device list

Figure 8: Checking Multipathing Policy from CLI

1.3.2- The I/O Operations Limit

With the Round Robin path selection policy, I/O rotates across the paths, which raises another question: how many I/O operations should be sent down one path before switching to the next?

Tuning the I/O operations limit of Round Robin can improve performance. When the Round Robin policy is set, the limit defaults to 1000 I/O operations per path. As a best practice, NGX Storage recommends setting the limit to 1 to keep performance optimal.

To set the limit to 1, open the ESXi shell and run the following command.

# for i in `esxcfg-scsidevs -c | awk '{print $1}' | grep naa.589c0` ; do esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device="$i" ; done

Afterwards, verify that the I/O operations limit is 1. The output should look similar to Figure 9.

Figure 9: Changing the I/O Operations Limit from 1000 to 1
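The `deviceconfig set` loop prints nothing, so the result has to be checked separately. One way, sketched below, is to query each NGX device with `esxcli storage nmp psp roundrobin deviceconfig get`. Its output should show a limit type of `Iops` and an I/O operation limit of `1` (field labels can differ slightly between ESXi releases). The `naa.589c0` prefix is the one used in the loop above; `ngx_devices` is a hypothetical helper that reads `esxcfg-scsidevs -c` output on standard input.

```sh
#!/bin/sh
# Sketch: show the Round Robin settings of every NGX device on this host.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# ngx_devices: read `esxcfg-scsidevs -c` output on stdin and print the IDs
# of devices whose first column starts with naa.589c0.
ngx_devices() {
    awk '$1 ~ /^naa\.589c0/ { print $1 }'
}

# show_ngx_rr: print the Round Robin configuration of each NGX device.
show_ngx_rr() {
    for dev in $(esxcfg-scsidevs -c | ngx_devices); do
        run esxcli storage nmp psp roundrobin deviceconfig get --device="$dev"
    done
}
```

Run `show_ngx_rr` in the ESXi shell. Any device that still reports a limit of 1000 was missed by the `deviceconfig set` loop.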

1.4- NFS Support

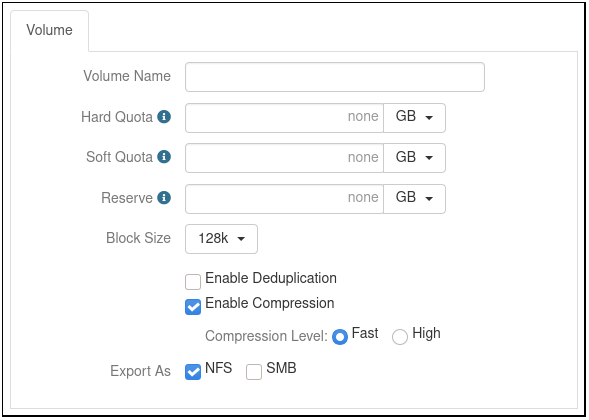

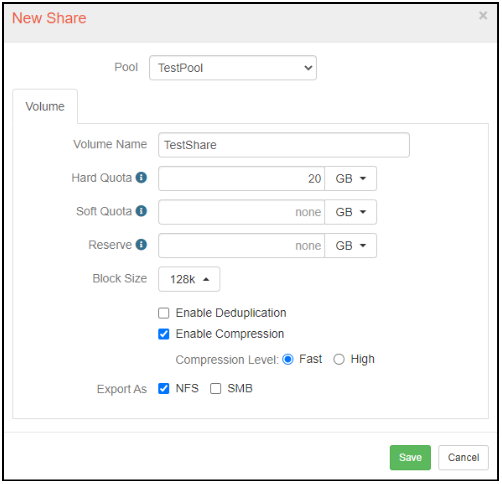

Network File System (NFS) is a distributed file system protocol that allows a client (host) to access files on network storage. An NGX Storage array can serve both FC/iSCSI and NFS to an ESXi host at the same time, which means an iSCSI LUN and an NFS share can be served over the same IP address and interface. When creating an NFS share, a Hard Quota must be defined. A Soft Quota is optional; if defined, it must be equal to or less than the Hard Quota. The Soft Quota counts only the space occupied by data, not snapshots. A Reserve guarantees that the given amount of space is reserved for the dataset and its snapshots; in other words, Reserve enables thick provisioning. Determine quota and reserve amounts after a needs analysis (Figure 10).

Recommended NFS settings for VMware with NGX Storage:

NFS.DiskFileLockUpdateFreq = 120

NFS.LockRenewMaxFailureNumber = 60

https://kb.vmware.com/s/article/1007909

Always mount the datastore with NFS version 3.

Figure 10: NFS Share Creation

When creating a new NFS share, keep the default block size of 128K as a best practice. NGX Storage recommends enabling compression in fast mode regardless of the data type. NGX Storage guarantees that fast-mode compression never decreases system performance while compressing data as much as this mode allows.
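On the ESXi side, the two recommended NFS advanced settings can be applied with `esxcli system settings advanced set`, and the share can be mounted as an NFS version 3 datastore with `esxcli storage nfs add` (NFS 4.1 datastores use the separate `esxcli storage nfs41` namespace). This is a sketch that must be repeated on every host. The NGX data IP, share path, and datastore name are placeholders passed as arguments, and `DRY_RUN=1` prints the commands instead of running them.

```sh
#!/bin/sh
# Sketch: apply the recommended NFS settings and mount an NGX share as NFS v3.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# apply_ngx_nfs_settings: set the two NFS advanced options on this host.
apply_ngx_nfs_settings() {
    run esxcli system settings advanced set --option=/NFS/DiskFileLockUpdateFreq --int-value=120
    run esxcli system settings advanced set --option=/NFS/LockRenewMaxFailureNumber --int-value=60
}

# mount_ngx_nfs3 HOST SHARE NAME: mount an NFS v3 share as datastore NAME.
mount_ngx_nfs3() {
    run esxcli storage nfs add --host="$1" --share="$2" --volume-name="$3"
}
```

For example, run `apply_ngx_nfs_settings`, then `mount_ngx_nfs3 192.0.2.20 /export/vmware ngx-nfs01` (example values only).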

2- VMware Integration and Plug-Ins

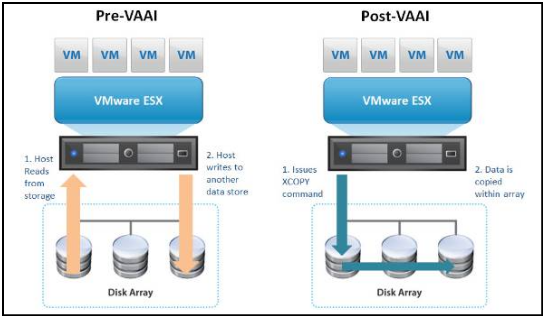

2.1- VAAI (vStorage APIs for Array Integration)

VAAI is a set of VMware APIs that integrates ESXi hosts with the storage. It improves ESXi host performance by offloading operations such as cloning and copying to the storage array. Without VAAI, clone operations are handled by the VMkernel Data Mover, which loads the ESXi hosts and the storage network and increases both cost and the probability of errors. NGX Storage supports the full VAAI command set, so the entire NGX Storage product line takes this storage traffic off the ESXi hosts and relieves storage network congestion. The VAAI features supported by NGX Storage are listed below.

1- Atomic Test & Set (ATS)

2- Clone Blocks/Full Copy/XCOPY

3- Zero Blocks/Write Same

4- Block Delete in ESXi 5.x and later hosts.

Figure 12: vStorage APIs for Array Integration

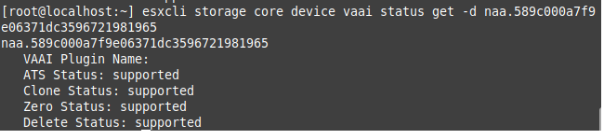

No plug-in or other software has to be installed to benefit from VAAI (Figure 12); NGX Storage arrays support it automatically. To check VAAI support on an ESXi host, use the following command (Figure 13).

# esxcli storage core device vaai status get

You will get an output similar to the following.

Figure 13: Checking VAAI Support
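On hosts with many devices, this output is long. The filter below is a minimal sketch that reads it on standard input and reports only the primitives that are not listed as `supported`, one line per device and primitive. It assumes the usual layout, in which each device ID starts at the beginning of a line and the `ATS Status`, `Clone Status`, `Zero Status`, and `Delete Status` lines are indented below it.

```sh
#!/bin/sh
# vaai_unsupported: read `esxcli storage core device vaai status get` output on stdin
# and print "<device> <primitive>" for each primitive not reported as "supported".
vaai_unsupported() {
    awk '
        /^[^ \t]/ { dev = $1; next }                        # unindented line: device ID
        /Status:/ && $NF != "supported" { print dev, $1 }   # e.g. "Delete Status: unsupported"
    '
}
```

Usage: `esxcli storage core device vaai status get | vaai_unsupported`. Local disks and LUNs from other arrays may legitimately appear in the result; to check a single NGX LUN, add `-d <naa id>` to the esxcli command.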

2.1.1- VAAI UNMAP Command

UNMAP enables VMFS to tell the storage target that specific blocks on the LUN backing a datastore are no longer in use. For example, after a VM is deleted and its space is no longer used by vSphere, the UNMAP command automatically reclaims that space and returns it to the storage array's resource pool.

NGX Storage supports the UNMAP command, which runs without any involvement of the storage administrator. In ESXi 7.0U1, UNMAP reclaims space at a default rate of 100 MB/s, and as a best practice NGX Storage recommends keeping this rate.
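To confirm the reclaim settings a datastore is actually using (automatic UNMAP applies to VMFS-6 datastores), read them from the ESXi shell; a one-off manual reclaim is also available. This is a sketch with the datastore label as an argument, and `DRY_RUN=1` prints the commands instead of running them.

```sh
#!/bin/sh
# Sketch: inspect automatic UNMAP settings and trigger a manual reclaim.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# show_reclaim LABEL: print the automatic space-reclamation settings of a datastore.
show_reclaim() {
    run esxcli storage vmfs reclaim config get --volume-label="$1"
}

# manual_unmap LABEL: run a one-off space reclamation on the datastore.
manual_unmap() {
    run esxcli storage vmfs unmap --volume-label="$1"
}
```

For example, `show_reclaim ngx-ds01` (an example label) shows the reclaim method, priority, and bandwidth in effect for that datastore.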

3- Thin Provisioning and Data Reduction

3.1- Thin Provisioning

Thin provisioning allocates FC/iSCSI LUN capacity to ESXi hosts on a just-enough, just-in-time basis. It optimizes the use of available storage by allocating blocks on demand, so capacity that has never been written to is not consumed. The traditional method, called thick or fat provisioning, allocates the full space up front, and that space cannot be given to other hosts even if it is never used.

There is no performance penalty for thin provisioning on NGX Storage systems. Nevertheless, some products may require guaranteed or fixed provisioning, and it is wise to follow those requirements to avoid support problems. Also monitor actual disk usage on the storage to avoid over-provisioning beyond real capacity: if the storage is over-provisioned and runs out of physical capacity, the entire system may halt or fail.

3.2- Deduplication

Deduplication eliminates duplicate copies of repeating data in FC/iSCSI LUNs and NFS shares presented to ESXi hosts. During deduplication, unique chunks of data are identified and stored only once. Deduplication improves both storage space utilization and the efficiency of data transfer over the network (Figure 14).

NGX Storage supports deduplication for FC/iSCSI LUNs and NFS shares presented to ESXi hosts. However, if the data is not repetitive, deduplication is inefficient and causes intensive background processing and a loss of performance. Therefore, analyze your data type before enabling deduplication.

As a general rule, NGX Storage does not recommend deduplication for VM environments or performance-intensive applications. Enable compression for data reduction instead.

Figure 14: Deduplication

3.3- Compression

Compression is the process of encoding data using fewer bits than the original representation. NGX Storage supports compression in fast mode and high mode. Fast mode is strongly recommended regardless of the data type: NGX Storage guarantees that it compresses data without reducing the performance of NGX Storage arrays (Figure 15).

The benefit of high mode depends on the data type. Before enabling it, consult NGX Storage engineers to analyze the data reduction and performance trade-offs.

Figure 15: Compression

4- Data Protection

Data protection is vital in a storage array. To simplify and automate it, NGX Storage provides several protection mechanisms: snapshots and synchronous/asynchronous replication.

4.1- Snapshot

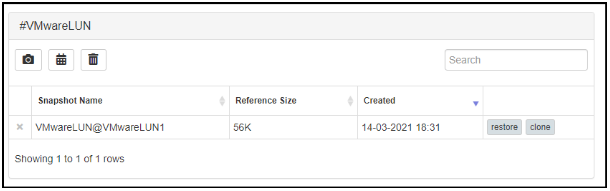

Snapshots establish recovery points, and therefore a recovery point objective (RPO): if a virtual machine crashes or a datastore is lost, the storage manager can restore the system to the state captured by a snapshot. NGX Storage supports an unlimited number of snapshots; however, keep a manageable number of snapshots per LUN or share so that they do not reduce system performance. What counts as manageable depends on the capacity of the LUN or share and varies case by case.

Figure 16: Taking Snapshot

NGX Storage lets storage managers schedule snapshots hourly, daily, weekly, monthly, or yearly. When needed, a snapshot can be cloned, which restores its data while keeping the original LUN or share intact. Although snapshots are a data protection mechanism, NGX Storage does not recommend relying on snapshots alone; use them together with other data protection techniques. Snapshots are managed under Storage Tools > Snapshot Manager (Figure 16).

4.2- Replication

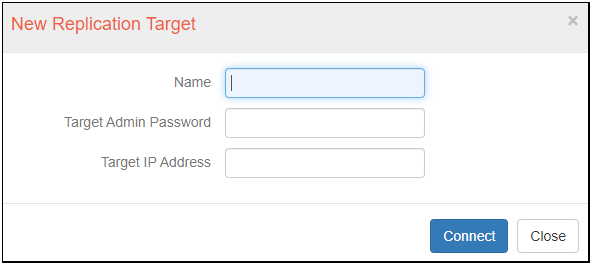

NGX Storage offers replication to transfer data synchronously or asynchronously to one or more targets. The replication target must be a secondary NGX Storage array. If this secondary array is located at a different geographical site, it can serve as a disaster recovery site.

Figure 17: Creating Replication Target

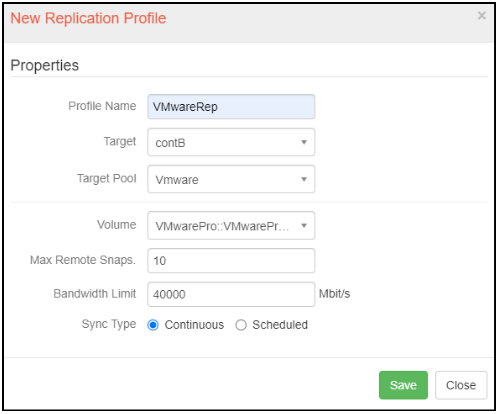

When creating a replication target from the production site, enter a target name that identifies it, the target admin password, and the target IP address. Replication targets are listed under Storage Tools > Replication Targets (Figure 17). After defining a replication target, create a replication profile that specifies the target pool, the volume the data is replicated to in that pool, the maximum number of remote snapshots, the bandwidth, and the sync type (Figure 18).

Throughput between two replicating arrays can reach at most 40,000 Mbit/s (40 Gbit/s). NGX Storage recommends analyzing your requirements carefully and provisioning a channel with appropriate bandwidth to minimize costs.
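A first estimate of the channel size is the amount of data that changes between replication cycles divided by the time allowed to transfer it. The sketch below does that arithmetic in whole megabits per second, rounding up. It assumes decimal units (1 GB = 8,000 Mbit) and ignores compression and protocol overhead, so the result is a starting point for the needs analysis rather than a sizing recommendation.

```sh
#!/bin/sh
# required_mbps CHANGED_GB WINDOW_SECONDS: minimum link speed in Mbit/s (rounded up)
# needed to move CHANGED_GB gigabytes within WINDOW_SECONDS.
required_mbps() {
    mbit=$(( $1 * 8000 ))                # 1 GB = 8,000 Mbit (decimal units)
    echo $(( (mbit + $2 - 1) / $2 ))     # integer ceiling division
}
```

For example, 500 GB of changed data within an 8-hour window (28,800 s) gives `required_mbps 500 28800`, which prints 139 (Mbit/s), far below the 40,000 Mbit/s maximum.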

The sync type lets storage managers replicate near-synchronously (Continuous) or asynchronously (Scheduled). For example, when a disaster recovery (DR) site is established, the production site needs to be replicated to it synchronously. On the other hand, a data backup sent to a third site can be scheduled to transfer asynchronously. Choose the sync type case by case.

Figure 18: Replication Profile

5- Performance Tuning

5.1- Virtual SCSI Adapter and Virtual Disk

ESXi 4.0 and later provide several virtual storage adapters. For optimal performance, the VMware Paravirtual SCSI adapter (PVSCSI) is recommended; it is a high-performance storage adapter with lower CPU utilization.

To use the VMware Paravirtual SCSI adapter (PVSCSI), both the guest operating system and the virtual hardware version must support it. Further details and configuration steps are in the VMware Knowledge Base article below.

Configuring disks to use VMware Paravirtual SCSI (PVSCSI) controllers (1010398)

VMware offers three virtual disk options: thick provision lazy-zeroed, thick provision eager-zeroed, and thin provision. Thick-provisioned virtual disks have all their space allocated at creation time. Lazy-zeroed disks allocate the space but zero each block only when it is first written; this is the default in ESXi 7.0U1. Eager-zeroed disks write zeros to the entire allocated space at creation. Creating an eager-zeroed disk takes much longer, but it delivers higher performance and is regarded as more secure than lazy-zeroed.

Thin-provisioned virtual disks, on the other hand, are the best choice for saving datastore space. Thin provisioning allows more virtual disk space to be allocated than the physical space available, which is called overprovisioning. In that case, the storage manager can add physical disks as needed but must closely monitor free space, because virtual machines can become unusable if the datastore runs out of space.
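The same three formats can be selected when a virtual disk is created from the ESXi shell with `vmkfstools`, where they are called `zeroedthick` (lazy-zeroed), `eagerzeroedthick`, and `thin`. The sketch below wraps that call and rejects other format names. The size and path are placeholders, and `DRY_RUN=1` prints the command instead of running it.

```sh
#!/bin/sh
# Sketch: create a virtual disk with an explicit provisioning format.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# create_vmdk SIZE FORMAT PATH
#   FORMAT: zeroedthick (lazy-zeroed) | eagerzeroedthick | thin
create_vmdk() {
    case "$2" in
        zeroedthick|eagerzeroedthick|thin) ;;
        *) echo "unsupported disk format: $2" >&2; return 1 ;;
    esac
    run vmkfstools -c "$1" -d "$2" "$3"
}
```

For example, `create_vmdk 40G eagerzeroedthick /vmfs/volumes/ngx-ds01/vm01/vm01_1.vmdk` (an example path; the VM folder must already exist).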

5.2- Volume Settings

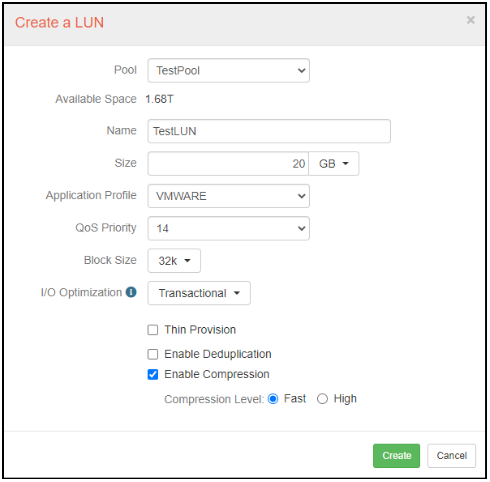

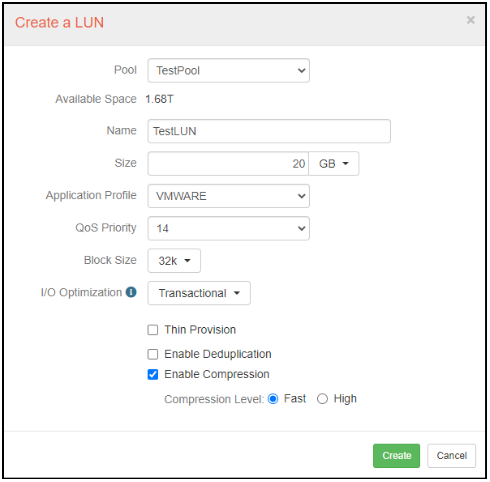

FC/iSCSI targets are mapped to LUNs created in a disk pool. To create a LUN, go to SAN > LUNs > Create a LUN in the NGX Storage GUI. After defining the name and size, choose VMWARE as the application profile for ESXi hosts as a best practice. This profile sets predefined QoS Priority and Block Size values based on the VMware best practice guide, and NGX Storage recommends not changing them.

I/O Optimization is set to Transactional by default. If the disk pool includes flash SSDs, write cache disks, and similar devices, keep Transactional I/O optimization. If the disk pool consists purely of rotating hard disk drives (HDDs), NGX Storage recommends changing I/O Optimization to Sequential (Figure 19).

Figure 19: I/O Optimization

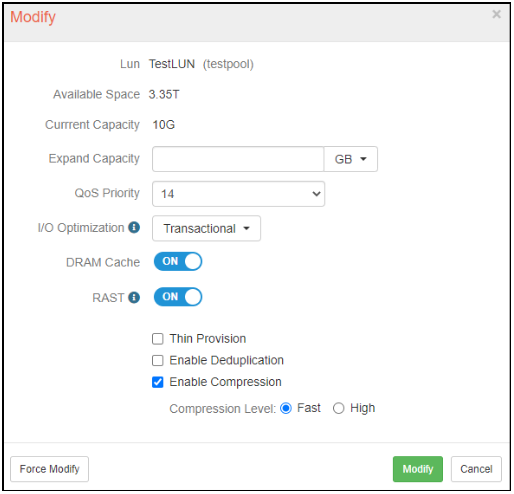

5.3- Realtime Automated Storage Tiering (RAST)

NGX Realtime Automated Storage Tiering (RAST) automatically moves active data to high-performance storage tiers and inactive data to slower, low-cost tiers. NGX Storage recommends always enabling this feature, because it boosts the performance of the storage array for hot datasets. RAST lets the storage make the most of its disks' performance, maximizing IOPS and throughput (Figure 20).

Figure 20: Realtime Automated Storage Tiering

COPYRIGHT

© 2022 NGX Teknoloji A.Ş. (NGX Storage). All rights reserved. Printed in Turkey. Specifications subject to change without notice. No part of this document covered by copyright may be reproduced in any form or by any means (graphic, electronic, or mechanical, including photocopying, recording, taping, or storage in an electronic retrieval system) without prior written permission of NGX Storage.

Software derived from copyrighted NGX Storage material is subject to the following license and disclaimer:

THIS SOFTWARE IS PROVIDED BY NGX Storage “AS IS” AND WITHOUT ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE, WHICH ARE HEREBY DISCLAIMED. IN NO EVENT SHALL NGX Storage BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

NGX Storage reserves the right to change any products described herein at any time, and without notice. NGX Storage assumes no responsibility or liability arising from the use of products described herein, except as expressly agreed to in writing by NGX Storage. The use or purchase of this product does not convey a license under any patent rights, trademark rights, or any other intellectual property rights of NGX Storage.

TRADEMARK

NGX Storage and the NGX Storage logo are trademarks of NGX TEKNOLOJI A.Ş. Other company and product names may be trademarks of their respective owners.